Insights & Articles

What We Learned at Confluent's DSWT Sydney: Real Streaming Stories That Matter

Last week, the Cloud Shuttle team joined hundreds of data professionals at Confluent's Data Streaming World Tour 2025 – Sydney, a key stop in a global series focused on the future of real-time data. From financial services and agriculture to government and banking, Australian and New Zealand organisations are going beyond batch processing and rethinking how data flows across systems. This post distills the most valuable lessons we took away from the event: the shift from batch to real-time, case studies from ASX, Bendigo Bank and LIC, deep dives into TableFlow and Apache Iceberg, and what all of this means for your own data architecture, costs, and future planning.

Peter Hanssens

May 09, 2025

Breaking the Schema Barrier: Our Hands-On Experience with TableFlow for Data Contracts

In modern data ecosystems, tracking schema changes and maintaining data contracts is a persistent challenge. At Cloud Shuttle, we've been exploring TableFlow as a way to improve visibility into schema evolution, particularly in event-driven systems. This post shares our early insights from using TableFlow across client environments ranging from fintech to logistics, and explains how its focused approach to schema governance is helping bridge the communication gap between data producers and consumers. Discover how this lightweight tool addresses real pain points without overcomplicating your data stack.

Peter Hanssens

Apr 17, 2025

Exploring ClickHouse BYOC on AWS: Performance and Control Without Compromise

ClickHouse's Bring Your Own Cloud (BYOC) capability represents a significant shift in how organizations can deploy high-performance analytics platforms while maintaining infrastructure control. This semi-managed model allows teams to keep data and compute resources within their own AWS environment while ClickHouse handles orchestration through a centralized control plane. Learn how this approach addresses critical pain points around security, cost optimization, and flexibility, and why Cloud Shuttle believes this deployment model will become increasingly relevant for data-intensive industries that require both performance and sovereignty.

Peter Hanssens

Apr 15, 2025

How Mage's PySpark Integration is Supercharging Our Data Engineering Capabilities

Mage Pro's seamless PySpark integration has transformed how Cloud Shuttle delivers data engineering solutions. Learn how this powerful feature has streamlined our development process, accelerated project delivery, and helped us achieve an 85% reduction in processing time for one of Australia's largest energy retailers.

Peter Hanssens

Apr 14, 2025

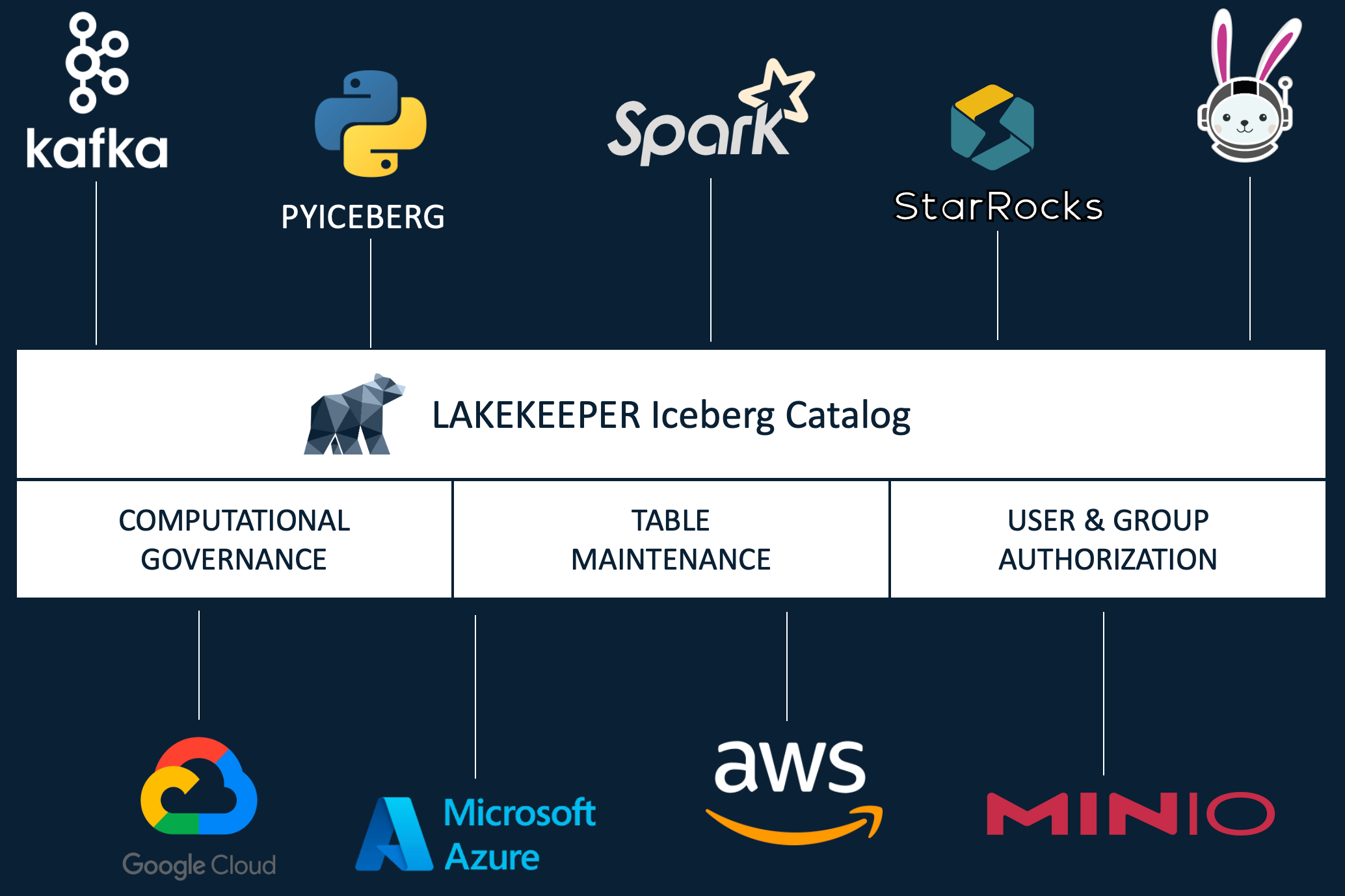

Getting started with Lakekeeper and Trino OPA (Data Governance)

Modern data platforms require strict governance to maintain compliance and security. This guide demonstrates how the Trino query engine and the Lakekeeper catalog can be integrated to enforce data governance in your data platform, with Trino respecting the access policies defined at the Lakekeeper catalog level.

Peter Hanssens

Mar 03, 2025

Breaking into Data Engineering

The Cloud Shuttle Graduate Data Engineering Workshop is a 5-day paid training program designed to help recent graduates, final-year students, and career switchers break into data engineering. Created by an industry veteran with 15+ years of experience, the workshop addresses the growing demand for data engineers (with a 30% CAGR since 2019) by providing hands-on, practical experience that universities often lack. Participants learn essential skills like building ETL pipelines, working with cloud platforms, and using tools like Python, SQL, and Airflow. The program also includes career preparation support and aims to bridge the gap between theoretical knowledge and real-world job requirements, backed by success stories of graduates landing positions at companies like TikTok and major consultancies.

Peter Hanssens

Feb 21, 2025

Why Data Products Are the Future of Data Engineering in Australia

Data products are revolutionizing data engineering in Australia, marking a shift from traditional ETL pipelines to more sophisticated, product-centric approaches. As evidenced by success stories from Latitude Financial, Newcrest Mining, and Woolworths, Australian organizations leveraging modern tools like Backstage, Mage, and Apache Iceberg are seeing significant improvements in their data operations. McKinsey reports that companies adopting a data-as-a-product mindset are 2.5 times more likely to excel in data-driven decision-making, highlighting the transformative potential of this approach. This evolution is enabling faster deployment, enhanced team collaboration, improved data quality, and reduced technical debt across various sectors in the Australian market.

Peter Hanssens

Jan 25, 2025

Streamlining Data Migration for an Energy Giant

Cloud Shuttle successfully transformed a leading energy company's data infrastructure by migrating from Matillion to a modern stack using Snowflake, dbt, and Airflow. In just five months, our team of 2.5 FTEs migrated over 300 models while ensuring zero disruption to business operations. The project included implementing automated orchestration, establishing S3 as a handoff point, and conducting thorough regression testing throughout the migration. This strategic modernisation resulted in a 30% increase in operational efficiency, enabling real-time data accessibility and empowering teams with faster, more reliable data processing capabilities for improved decision-making.

Peter Hanssens

Oct 24, 2024

Driving Data Transformation for a Leading Insurance Provider

When a leading insurance provider found their legacy SSIS systems creating bottlenecks and driving up costs, Cloud Shuttle stepped in with a transformative solution. By migrating over 100 SQL models from SSIS to dbt on Snowflake, we helped modernize their entire data infrastructure with just one FTE. The migration eliminated data silos, simplified ETL processes, and reduced infrastructure costs while providing seamless cross-product insights. This strategic transformation not only solved immediate operational challenges but also positioned the client's data infrastructure for future growth and innovation in the competitive insurance landscape.

Peter Hanssens

Oct 02, 2024

GraphSummit Sydney 2024 recap: Innovations from the frontier of Data and AI

Neo4j's GraphSummit World Tour 2024 brought together 150 industry leaders in Sydney to showcase how Australian and New Zealand organizations are combining graph technologies with AI to solve real-world challenges. Highlights included Commonwealth Bank's unveiling of GraphIT, their network infrastructure digital twin, Spark NZ's innovative use of graph databases for RFP automation, and McKinsey's sobering perspective on GenAI implementation challenges. The summit featured practical workshops on architecting graph applications and enabling GenAI breakthroughs with knowledge graphs, while Cloud Shuttle demonstrated our DataEngBytes chatbot powered by Neo4j, LangChain, and Amazon Bedrock. As graph databases continue to evolve, the event highlighted their growing importance in everything from supply chain optimisation to drug discovery.

Peter Hanssens

May 13, 2024